Or put another way ... directing application log messages into the OC4J logging system and viewing them.

Let's say you are wisely using some form of logging framework within your application. And when using OC4J, you use the LogViewer functionality within Application Server Control (ASC) to view the various log messages emitted by the subsystems of OC4J. Perhaps, you think to yourself, it'd be quite convenient to also include the log messages from my application in the general OC4J log so they can be viewed from the same LogViewer.

Here's how it can be done!

I'm not getting into the religious argument as to which logging framework you are using. For pure expediency, my example will use the JDK logging API.

OK, so in your application, you are using a logger naming hierarchy of some form, and using the logger to issue log messages at different levels.

    // java.util.logging.Logger; loggers are obtained with getLogger(name)
    Logger logger = Logger.getLogger("foo.bar.web.EmployeeFrontEnd");

    public void doGet(HttpServletRequest request,
                      HttpServletResponse response) throws ServletException, IOException {
        response.setContentType(CONTENT_TYPE);
        logger.fine(
            String.format("Handling web request for %s", request.getRequestURL()));

        PrintWriter out = response.getWriter();
        out.println("<html>");
        out.println("<head><title>EmployeeFrontEnd</title></head>");
        out.println("<body>");

        Employee test = Employee.getTestInstance();
        logger.finest(
            String.format("Test Employee Instance: %s", test));
        logger.finest(
            String.format("Calling %s to locate office for %s",
                employeeManager.toString(),
                test.identifier(test.ID_SHORT)));

        String location = employeeManager.locateEmployeeOffice(test);
        logger.finest(
            String.format("bean returned %s for %s ",
                location, test.identifier(test.ID_SHORT)));

        out.printf("<p>Employee: %s<br/>Office: %s</p>", test.identifier(test.ID_SHORT), location);

        logger.fine(String.format("Employee currently earns $%s", test.getSalary()));
        test.raiseSalary(15D);
        out.printf("<p>Give employee 15 percent raise, now earns %s</p>", test.getSalary());

        out.println("</body></html>");
        out.close();
    }

Cool.

Now if you are using standard JDK logging, you can configure the logging handlers and log levels using various mechanisms, to ultimately direct the log entries from the application into some persistent form to view at a later point.
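As a rough illustration of one such mechanism, here is a minimal sketch that configures a JDK logger programmatically. The `ListHandler` class is a made-up in-memory stand-in for whatever file or console handler you would really use, and the log messages are shortened versions of the ones in the servlet above. It only shows how a level and a handler combine to decide which entries get kept.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

public class ProgrammaticLoggingConfig {

    /** Hypothetical in-memory handler standing in for a real file handler. */
    public static class ListHandler extends Handler {
        public final List<String> messages = new ArrayList<>();

        @Override
        public void publish(LogRecord record) {
            if (isLoggable(record)) {
                messages.add(record.getLevel() + ": " + record.getMessage());
            }
        }

        @Override public void flush() { }
        @Override public void close() { }
    }

    /** Configures the application logger, logs twice, returns what was kept. */
    public static List<String> run() {
        Logger logger = Logger.getLogger("foo.bar.web.EmployeeFrontEnd");
        ListHandler handler = new ListHandler();
        logger.setLevel(Level.FINE);         // FINE and above pass; FINEST is dropped
        logger.setUseParentHandlers(false);  // keep the demo output off the console
        logger.addHandler(handler);
        try {
            logger.fine("Handling web request");
            logger.finest("Test Employee Instance");
        } finally {
            // Undo the changes so other code sees an untouched logger.
            logger.removeHandler(handler);
            logger.setUseParentHandlers(true);
            logger.setLevel(null);
        }
        return handler.messages;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Only the `fine` message survives here, because the logger level is set to `FINE`. The same level and handler choices can be made declaratively in a configuration file, which is the route taken in the rest of this entry.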

Now this is where the standard logging API, OC4J and the ASC LogViewer intersect.

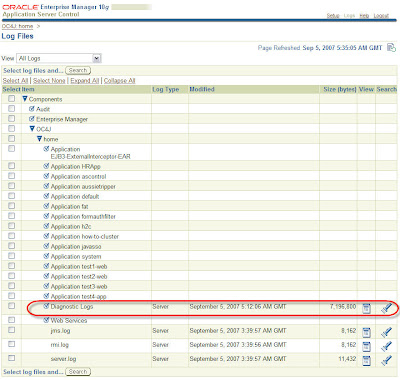

First off, the ASC LogViewer knows about all the log files that are generated by OC4J. Among them is the big daddy of log files -- j2ee/home/log/oc4j/log.xml -- this is known as the diagnostics log file.

This file is set up as a log target in the j2ee/home/config/j2ee-config.xml file, where the oc4j-handler is configured to use the Oracle common logging mechanism:

    <log_handler name="oc4j-handler" class="oracle.core.ojdl.logging.ODLHandlerFactory">
        <property name="path" value="../log/oc4j"/>
        <property name="maxFileSize" value="10485760"/>
        <property name="maxLogSize" value="104857600"/>
        <property name="encoding" value="UTF-8"/>
        <property name="supplementalAttributes" value="J2EE_APP.name,J2EE_MODULE.name,WEBSERVICE.name,WEBSERVICE_PORT.name"/>
    </log_handler>

Then, by convention, the oracle naming hierarchy is specified as being handled by this oc4j-handler:

    <logger name="oracle" level="NOTIFICATION:1" useParentHandlers="false">
        <handler name="oc4j-handler"/>
        <handler name="console-handler"/>
    </logger>

Thus any messages written to a logger under the "oracle" root logger will be directed to the oc4j-handler, which writes them out in XML form to the j2ee/home/log/oc4j/log.xml file.

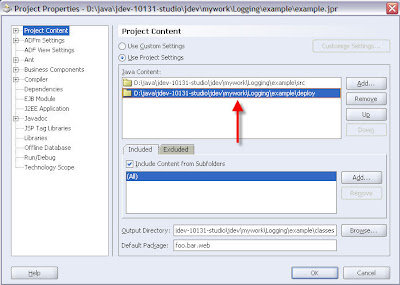

To therefore include log messages from your application in the OC4J diagnostics log file, all you need to do is add a new <logger> entry in the j2ee-config.xml file that specifies your logger name and the level, and declares it to use the oc4j-handler.

    <logger name="foo" level="FINEST">
        <handler name="oc4j-handler"/>
    </logger>
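Why is a single entry for "foo" enough when the servlet logs as "foo.bar.web.EmployeeFrontEnd"? In plain JDK logging, a logger with no level or handlers of its own inherits both from its nearest named ancestor. The sketch below shows that rule using only `java.util.logging`. It assumes the OC4J `<logger>` entry ends up configuring an ordinary JDK logger, and the `NameRecordingHandler` and the "baz.Unrelated" logger are made up for the demonstration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.Level;
import java.util.logging.LogRecord;
import java.util.logging.Logger;

public class LoggerHierarchyDemo {

    /** Hypothetical handler that records which logger each entry came from. */
    public static class NameRecordingHandler extends Handler {
        public final List<String> loggerNames = new ArrayList<>();

        @Override
        public void publish(LogRecord record) {
            loggerNames.add(record.getLoggerName());
        }

        @Override public void flush() { }
        @Override public void close() { }
    }

    public static List<String> run() {
        // Equivalent in spirit to <logger name="foo" level="FINEST"> plus one handler.
        Logger foo = Logger.getLogger("foo");
        NameRecordingHandler handler = new NameRecordingHandler();
        foo.setLevel(Level.FINEST);
        foo.setUseParentHandlers(false);  // do not also send entries to the console
        foo.addHandler(handler);

        // Child loggers: no level or handler set, so they inherit from "foo".
        Logger web = Logger.getLogger("foo.bar.web.EmployeeFrontEnd");
        Logger ejb = Logger.getLogger("foo.bar.ejb.EmployeeManagerBean");

        // A logger outside the "foo" hierarchy never reaches the handler.
        Logger other = Logger.getLogger("baz.Unrelated");
        other.setUseParentHandlers(false);

        try {
            web.finest("Calling bean to locate office");
            ejb.finest("Locating office");
            other.severe("Not under foo");
        } finally {
            foo.removeHandler(handler);
            foo.setUseParentHandlers(true);
            foo.setLevel(null);
            other.setUseParentHandlers(true);
        }
        return handler.loggerNames;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Both "foo.bar" loggers reach the handler attached to "foo", while the unrelated logger does not. That is the behaviour the one-line configuration above relies on.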

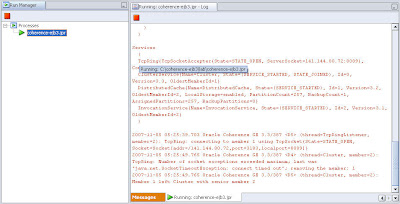

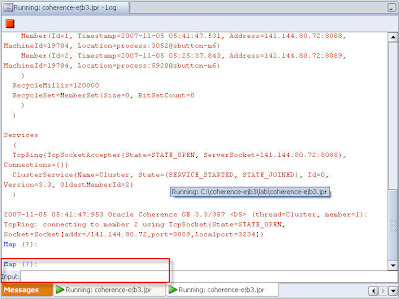

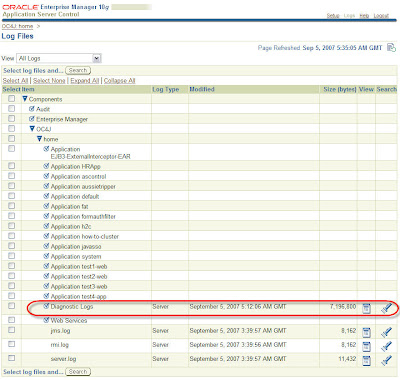

Now using ASC, select the logs entry at the bottom of the page to view all the logs.

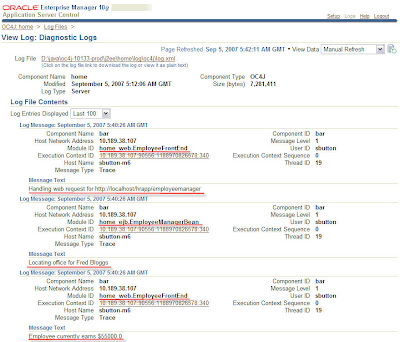

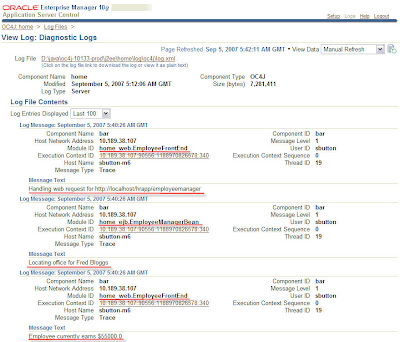

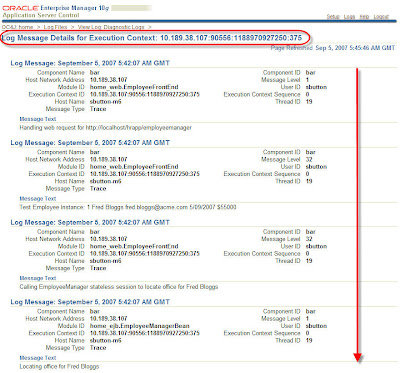

By clicking on the Diagnostics Logs file, you should see it showing both the log entries for OC4J PLUS the log entries from your application.

You can see from the log messages the component where the log entry was generated. From the example above you can see log messages from the web_EmployeeFrontEnd component and the ejb_EmployeeManagerBean.

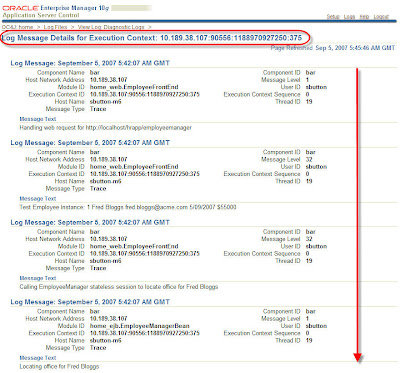

Now the really really cool thing you can do from here is to view all the log entries from the same execution path. Basically what happens is that the Oracle common logging mechanism allocates an execution context ID to every log message, which enables it to then correlate the various log entries from the different components of an execution path. By simply clicking on the Execution Context ID (ECID) link for a log entry of interest, all the log files will be searched for that ECID and each entry will then be displayed in time stamp order.

This effectively gives you the log entries, in sequence, for an individual request.
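To make the idea concrete, here is a small sketch of that kind of correlation: filter entries by context ID, then sort by time stamp. This is not how the LogViewer is implemented. The `LogEntry` type, its fields and the sample ECID values are all invented for illustration; only the two component names come from the example above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

public class EcidCorrelationSketch {

    /** Hypothetical, simplified view of one diagnostic log entry. */
    public static final class LogEntry {
        public final String ecid;
        public final String component;
        public final long timestamp;
        public final String message;

        public LogEntry(String ecid, String component, long timestamp, String message) {
            this.ecid = ecid;
            this.component = component;
            this.timestamp = timestamp;
            this.message = message;
        }
    }

    /** Returns the entries sharing the given ECID, oldest first. */
    public static List<LogEntry> entriesFor(String ecid, List<LogEntry> all) {
        List<LogEntry> matches = new ArrayList<>();
        for (LogEntry entry : all) {
            if (entry.ecid.equals(ecid)) {
                matches.add(entry);
            }
        }
        matches.sort(Comparator.comparingLong((LogEntry e) -> e.timestamp));
        return matches;
    }

    /** Made-up entries from two requests, deliberately out of order. */
    public static List<LogEntry> sample() {
        return Arrays.asList(
            new LogEntry("ecid-A", "ejb_EmployeeManagerBean", 2000L, "Locating office"),
            new LogEntry("ecid-B", "web_EmployeeFrontEnd", 1500L, "Handling web request"),
            new LogEntry("ecid-A", "web_EmployeeFrontEnd", 1000L, "Handling web request"),
            new LogEntry("ecid-A", "web_EmployeeFrontEnd", 3000L, "Employee currently earns"));
    }

    public static void main(String[] args) {
        for (LogEntry entry : entriesFor("ecid-A", sample())) {
            System.out.println(entry.timestamp + " " + entry.component + " " + entry.message);
        }
    }
}
```

The entries for one request come back as web, then ejb, then web again, which is the per-request sequence you get from clicking the ECID link.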

How's that for a handy capability!

Once your log entries are being handled within the Oracle common logging mechanism, you can then utilize the search facilities within the LogViewer to search for items of interest. Explore away!

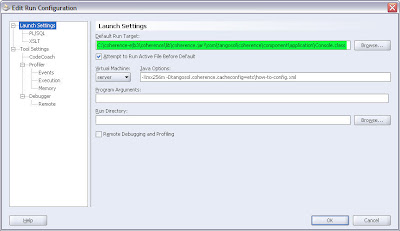

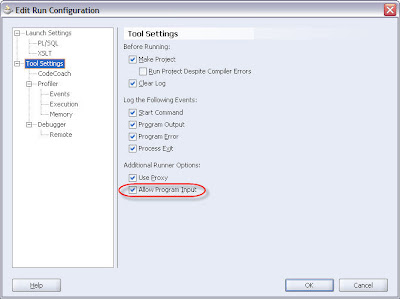

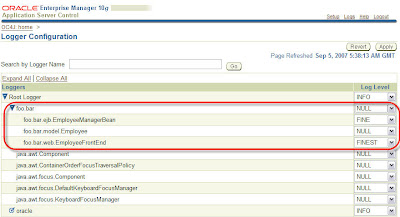

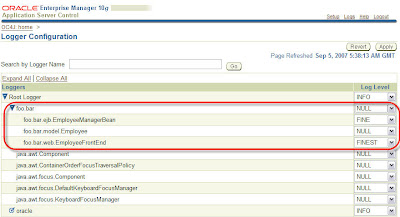

And for one final rabbit out of the hat for this blog entry, you can also use ASC to configure your application level loggers. On the Administration page, select the Configure Loggers link. If your application has run and your loggers have registered themselves (or you statically configured them in the j2ee-config.xml file) you will see the logger name listed, along with a select list to allow you to specify the level for each logger. This lets you configure your custom application logging on the fly.
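If you are curious what changing a level on the fly looks like at the JDK level, the standard `PlatformLoggingMXBean` offers the same kind of operation: list the registered loggers and set a level by name. I am not claiming this is what ASC does under the covers; this is just a minimal sketch of the equivalent in plain Java, reusing the logger name from the servlet example.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.PlatformLoggingMXBean;
import java.util.logging.Level;
import java.util.logging.Logger;

public class RuntimeLevelChange {

    // Strong reference so the logger stays registered with the LogManager.
    public static final Logger APP_LOGGER =
        Logger.getLogger("foo.bar.web.EmployeeFrontEnd");

    /** Sets the level of a registered logger by name and returns the new level name. */
    public static String setLevel(String loggerName, String levelName) {
        PlatformLoggingMXBean logging =
            ManagementFactory.getPlatformMXBean(PlatformLoggingMXBean.class);
        // Throws IllegalArgumentException for an unknown logger or level name.
        logging.setLoggerLevel(loggerName, levelName);
        return logging.getLoggerLevel(loggerName);
    }

    public static void main(String[] args) {
        String level = setLevel("foo.bar.web.EmployeeFrontEnd", "FINEST");
        System.out.println("Level is now " + level);
        System.out.println("FINEST enabled: " + APP_LOGGER.isLoggable(Level.FINEST));
    }
}
```

As with the ASC page, a logger only shows up once it has been registered, which is why the sketch holds on to `APP_LOGGER` before touching the MXBean.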

----------------

Listening to:

Pixies - Here Comes Your Man